- Re: I am debating between the 100-400 mm F4.5-5.6 ...

07-28-2014 11:58 AM

I would like to purchase one of the two lenses listed above and am looking for advice. I will take mostly wildlife and some sports pictures. I want it to be super sharp! Thanks so much.


07-28-2014 02:34 PM

300 mm + a crop body is a tight field of view. Personally I'd go the 100-400 route. I have owned the 300 f4 & now own the 300 f2.8 but find my 100-400 much more useful.

07-29-2014 12:45 PM - edited 07-29-2014 05:17 PM

@TCampbell wrote:

@cale_kat wrote: For a 70D this is easy, go for the 100-400. You won't be getting f/4 mounting a 300mm f/4 on a crop sensor body; read here: http://forums.usa.canon.com/t5/EOS/Canon-6D-vs-Canon-70D-vs-Canon-7D/td-p/105947/page/4

Because the 300mm f/4 will perform as a f/6.4 on the crop sensor sized 70D, you might as well go with the longer 100-400 (f/7.2 - 9). If you're going to spend big money on a f/6.4 to get a single "reach", you already know the "sweet spot" in your photography and are in no need of convincing.

Crop-sensors don't work like this.

The 300mm f/4 lens is always a 300mm f/4 lens. The 100-400 is always an f/4.5-5.6 lens. You don't lose f-stops by mounting the lens to a crop-frame body.

What you are doing, is cropping and enlarging. You are capturing a tighter angle of view because the chip is smaller and thus enlarge it more to get the same size output image. This does not change any of the physics of the lens.

Tim, I know about the cropping but where do you get the enlarging? The output size is not the same because there simply is no information from which to make it the same size. This is a function of the sensor size, not the lens. That's why I use the words "... performs as..."

At maximum aperture, which is what we're interested in, the Full Frame sensor will be filled by the image projected by an EF lens. When mounted to a crop sensor body, this same lens is simply incapable of delivering as much light to the sensor as before.

In the composite image below the cat on the left was shot using a Full Frame Camera with a 50mm lens at f/2.8 1/1000 sec 100 ISO. The cat on the right was shot using a Crop Frame Camera with a 50mm lens at f/2.8 1/1000 sec 100 ISO. The distance to the subject remained fixed by way of a tripod. Note that the image on the left contains all the information (light) as the image on the right AND it includes additional information on all sides.

Even though the lenses were not identical the loss of control is evident because the Crop Frame image (right) is maxed out in terms of its ability to gather light. But I could move further away from the subject with the Crop Frame camera to achieve the same information as is captured by the Full Frame camera. Unfortunately doing so would cause a loss of control.

The image below was shot with the Crop Frame camera positioned further away from the subject to allow me to include all the information that was in the original Full Frame image. Even though all the lens settings, with the exception of focus, were unchanged, you can recognize that the new Crop image has a different "feel". Much of the original background blur is gone. As the camera is physically moved away from the subject, the light reaching the sensor is more organized and less diffuse in those areas outside the DOF. This is a real loss of control and is a direct result of sensor size.

Here's a test. Stand 10 feet from a friend. Ask the friend to throw a handful of rice at you while you hold a cup out to catch the rice. Count the rice grains or weigh them. Now do the same thing when standing 15 feet from your friend. This time your cup will collect less rice. The reason is that only the rice that is more or less coming straight at the cup will be caught at 15 feet. At 10 feet you catch some of the rice that is scattering every which way as it reaches the cup in addition to the rice that is coming straight on.

The lens projects an image circle into the camera body. Some of the image lands on the area occupied by the sensor.... some spills off the sides and isn't captured in the image.

We're on the same page here...

You could take a full-frame body, take the same photo using the same focal length, f-stop, and subject distance... but then crop the resulting image so that you're just using the center area and THEN "enlarging" that image so that it's the same print size as the original uncropped image. It would be as though you've "zoomed in" (when really you just cropped and enlarged).

This is why people refer to a 300mm lens on an APS-C camera as though it works like a 480mm lens. It isn't really a 480mm lens... but it's been cropped and enlarged to get a result similar to what you'd get if you had taken the shot with a 480mm lens using a full-frame camera.
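The "works like a 480mm lens" comparison can be checked with a little trigonometry. This is only a sketch: the sensor widths are nominal values assumed for illustration (36 mm for full frame, 22.5 mm for Canon APS-C), and `angle_of_view_deg` is a made-up helper name using the usual thin-lens, focused-at-infinity approximation.

```python
import math

def angle_of_view_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal angle of view (thin-lens approximation, focus at infinity)."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))

# Nominal sensor widths (assumed values, not taken from the thread).
FULL_FRAME_WIDTH_MM = 36.0
APS_C_WIDTH_MM = 22.5  # 36 / 22.5 = 1.6, the crop factor quoted in the thread

crop_300 = angle_of_view_deg(APS_C_WIDTH_MM, 300)
full_300 = angle_of_view_deg(FULL_FRAME_WIDTH_MM, 300)
full_480 = angle_of_view_deg(FULL_FRAME_WIDTH_MM, 480)

print(f"300mm on APS-C:      {crop_300:.2f} degrees")
print(f"300mm on full frame: {full_300:.2f} degrees")
print(f"480mm on full frame: {full_480:.2f} degrees")
```

Under these assumptions the 300mm lens on the smaller sensor and a 480mm lens on full frame cover the same angle. Note that the f-number never appears in the calculation.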

I get it. Really, I do. But the issue isn't the cropping. The issue is the loss of light.

You only lose f-stops when you use a teleconverter.

Why do you think this is? Is it possible that the teleconverter actually reduces the amount of light that the sensor "sees"? Where do you see the loss of light? Because I see an extra tube, lengthening the lens barrel and creating a larger image circle to be projected at the sensor. The result is just more light "missing" the sensor.

Each f-stop is based on a power of the square root of 2 (which is a value very close to 1.4). That's actually *why* the common teleconverters are 1.4x and 2x (√2, and √2 x √2). When you increase the diameter of a circle by a factor of √2, the area of that circle exactly doubles. This is why, when you reduce the diameter of the usable aperture by a factor of √2 (one full stop), you exactly "halve" the area through which the light can pass and thus exactly "halve" the amount of light delivered into the camera (per unit area).
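The √2 relationship is easy to tabulate. The sketch below is illustrative only (the helper names `f_stop` and `relative_light` are invented here); it generates the full-stop series as powers of √2 and shows the light per unit area halving at each step.

```python
import math

def f_stop(n: int) -> float:
    """The n-th full stop: f/1 is n=0, f/1.4 is n=1, f/2 is n=2, and so on."""
    return math.sqrt(2) ** n

def relative_light(f_number: float) -> float:
    """Light per unit area relative to f/1; it scales as 1 / N^2."""
    return 1.0 / f_number ** 2

for n in range(7):
    N = f_stop(n)
    print(f"f/{N:.1f}  relative light per unit area: {relative_light(N):.4f}")
```

Marked f-numbers such as f/5.6 are rounded versions of these values (the exact figure is about 5.66).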

When you use a 1.4x teleconverter, you lose one full stop of aperture. The aperture didn't physically get smaller... the lens got longer.

Actually the aperture did get physically smaller. Aperture is a function of lens opening and length. You can't maintain the same maximum aperture if you alter the length, which you have by adding the teleconverter, without also enlarging the lens opening, which you can't.

The ratio of dividing the same diameter opening into a longer focal length means that the ratio was altered... by exactly one stop.

And what is an aperture if not a ratio?

When you use a teleconverter, you lose a stop (or two... depending on which teleconverter is used). When you don't use a teleconverter, but merely move the lens to a camera body which has a smaller sensor, nothing actually changes except the angle of view captured on the chip.

Tim, if I might make a comment. You appear to give more weight to the aperture having a fixed value when manufactured. But the value is not the same thing as ability to capture light. Its meaning as a ratio is tied to the "35mm" image circle it projects. You can't capture as much light, at maximum aperture, with a smaller sensor.

Here's a test. Hold two measuring cups* of the same size together and stand out under a sprinkler for a few minutes. Now measure how much water you captured. Repeat the same with a single measuring cup, same size as before. Measure how much you captured after the same amount of time. I guarantee that you will capture more water with two cups than you would with one.

Maximum aperture is nothing more than a measure of how much light you can capture for a given focal length. You will never be able to capture the same amount using fewer cups.

* Measuring cups are used to represent the area of the sensor, i.e. a two-cup sensor has more area than a one-cup sensor. This has nothing to do with the number of "cups" that any given sensor may contain because it is widely known that sensor cell-size can differ.

07-29-2014 07:37 PM

Hi Cale,

This was starting to become rather long and I noticed comments kept repeating what was effectively the same point, so I took the liberty of reducing it somewhat. My comments are in blue below.

@cale_kat wrote: Tim, I know about the cropping but where do you get the enlarging? The output size is not the same because there simply is no information from which to make it the same size. This is a function of the sensor size, not the lens. That's why I use the words "... performs as..."

If you want an output image of a given size (e.g. a 4 x 6" photo for example), then you'll need to enlarge the image from the crop-sensor camera more than the image from the full-frame camera in order to achieve that size.
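To put rough numbers on "enlarge more": the sketch below assumes nominal sensor widths of 36 mm (full frame) and 22.5 mm (Canon APS-C) and a print 6 inches wide. These figures and the helper name `enlargement_factor` are illustrative assumptions only.

```python
MM_PER_INCH = 25.4

def enlargement_factor(print_width_in: float, sensor_width_mm: float) -> float:
    """Linear magnification needed to go from the sensor image to the print."""
    return print_width_in * MM_PER_INCH / sensor_width_mm

full_frame = enlargement_factor(6, 36.0)   # 4 x 6" print from a full-frame image
aps_c = enlargement_factor(6, 22.5)        # same print from an APS-C image

print(f"Full frame: {full_frame:.2f}x enlargement")
print(f"APS-C:      {aps_c:.2f}x enlargement")
print(f"Ratio:      {aps_c / full_frame:.2f}")
```

With these assumed widths the ratio of the two enlargements is the 1.6x crop factor.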

At maximum aperture, which is what we're interested in, the Full Frame sensor will be filled by the image projected by an EF lens. When mounted to a crop sensor body, this same lens is simply incapable of delivering as much light to the sensor as before.

To avoid confusion... the amount of light delivered into the camera body is identical. The amount of light which lands on the sensor when using equal unit-areas of center (e.g. pick one square centimeter for example) would be identical.

Each individual photo-site (or "pixel" if you want to think of it as a debayered image) does not know how many neighboring photosites it has and whether they are receiving light or not. It only knows how much light it is receiving.

In the composite image below the cat on the left was shot using a Full Frame Camera with a 50mm lens at f/2.8 1/1000 sec 100 ISO. The cat on the right was shot using a Crop Frame Camera with a 50mm lens at f/2.8 1/1000 sec 100 ISO. The distance to the subject remained fixed by way of a tripod. Note that the image on the left contains all the information (light) as the image on the right AND it includes additional information on all sides.

Even though the lenses were not identical the loss of control is evident because the Crop Frame image (right) is maxed out in terms of its ability to gather light. But I could move further away from the subject with the Crop Frame camera to achieve the same information as is captured by the Full Frame camera. Unfortunately doing so would cause a loss of control.

I'm not sure what you mean by "loss of control". If the photographer changes their subject distance to compensate for the different angle of view, they are simply recomposing the shot. I'm not sure that's a "loss" ... it's just "different".

The image below was shot with the Crop Frame camera positioned further away from the subject to allow me to include all the information that was in the original Full Frame image. Even though all the lens settings, with the exception of focus, were unchanged, you can recognize that the new Crop image has a different "feel". Much of the original background blur is gone. As the camera is physically moved away from the subject, the light reaching the sensor is more organized and less diffuse in those areas outside the DOF. This is a real loss of control and is a direct result of sensor size.

If you change your subject distance, but use the same focal length and the same focal ratio (f-stop) then you will change the depth of field. That's part of the physics of the optics.
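A rough sketch of that dependence, using the standard hyperfocal-distance approximation. The circle-of-confusion value (0.019 mm, a commonly quoted figure for APS-C) and the two subject distances are assumptions chosen for illustration, not values from the thread.

```python
import math

def hyperfocal_mm(focal_mm: float, f_number: float, coc_mm: float) -> float:
    """Hyperfocal distance for a given circle of confusion."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm

def depth_of_field_mm(focal_mm: float, f_number: float,
                      subject_mm: float, coc_mm: float) -> float:
    """Total depth of field (far limit minus near limit)."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    if subject_mm >= h:
        return math.inf  # everything out to infinity is acceptably sharp
    far = subject_mm * (h - focal_mm) / (h - subject_mm)
    return far - near

COC_APS_C_MM = 0.019  # assumed circle of confusion for an APS-C sensor

close = depth_of_field_mm(50, 2.8, 2000, COC_APS_C_MM)    # subject at 2.0 m
farther = depth_of_field_mm(50, 2.8, 3200, COC_APS_C_MM)  # subject at 3.2 m

print(f"50mm f/2.8 at 2.0 m: DOF is about {close:.0f} mm")
print(f"50mm f/2.8 at 3.2 m: DOF is about {farther:.0f} mm")
```

Same lens, same f-stop, same sensor: only the distance changed, and the depth of field grew with it.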

In your original post, I noted that you had translated the f-stop range on the lens.

In the case of the 300mm f/4 lens, you translated the f/4 to f/6.4 (multiplying by the 1.6x crop-factor). The focal ratio of the lens will not change.

The notion that the focal ratio would change because the size of the sensor changes is somewhat like believing the full-frame camera has one focal ratio in the center of the image, and a different focal ratio near the edges of the image. Or another way to think about it would be to use a movie projector to shine an image onto a screen of a given size, but then substitute that screen for a slightly smaller screen.... but placed at the identical distance from the projector.

If you do this (substitute the large screen for a smaller one), the image quality will not change nor will the brightness of it. But you will see less of the image because the parts that no longer fit on the small screen will spill off the sides. The part of the image that lands on the screen and does not spill off the sides will not change.

Here's a test. Stand 10 feet from a friend. Ask the friend to throw a handful of rice at you while you hold a cup out to catch the rice. Count the rice grains or weigh them. Now do the same thing when standing 15 feet from your friend. This time your cup will collect less rice. The reason is that only the rice that is more or less coming straight at the cup will be caught at 15 feet. At 10 feet you catch some of the rice that is scattering every which way as it reaches the cup in addition to the rice that is coming straight on.

I think you are trying to describe the inverse-square law using rice as an example. As light travels farther away from a source, it is spreading out. The amount of light landing in some fixed "unit area" of space (say... 1 square foot) will decrease based on its distance from that light source. It will exactly "halve" each time the distance from the light source increases by a factor equal to the square root of 2 (√2... approximately 1.4). In other words if we use the light landing on a subject from a light source positioned 5 feet away as our baseline, but now move the light so that it is 7 feet away, the amount of light landing on that subject will be reduced to just half (5 x 1.4 = 7). If we increase the distance to 10 feet, it will be halved again... and again at 14 feet... and again at 20 feet... and so on.
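The halving sequence can be sketched as follows, assuming an idealized point light source (real lights only approximate this). The function name `relative_illuminance` is invented for the example.

```python
def relative_illuminance(distance_ft: float, reference_ft: float = 5.0) -> float:
    """Light per unit area relative to the reference distance (inverse-square law)."""
    return (reference_ft / distance_ft) ** 2

for d in (5, 7, 10, 14, 20):
    print(f"{d:>2} ft: {relative_illuminance(d):.3f} of the baseline")
```

The 7 ft and 14 ft figures come out slightly above an exact half and eighth because 1.4 is a rounded stand-in for √2.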

This isn't really the same example, however. If I hold a hand-held incident light meter in front of my subject and the light meter registers Ev 15 (a typical outdoor "sunny 16" type exposure), then I can use the EV 15 exposure (e.g. ISO 100, f/16, 1/100th sec shutter is an Ev 15 exposure) to photograph that subject regardless of my subject distance or lens focal length or camera sensor size.
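For reference, here is the usual exposure-value arithmetic at ISO 100, EV = log2(N² / t), as a small sketch. The helper name `exposure_value` is an assumption for illustration.

```python
import math

def exposure_value(f_number: float, shutter_s: float) -> float:
    """Exposure value at ISO 100: EV = log2(N^2 / t)."""
    return math.log2(f_number ** 2 / shutter_s)

sunny_16 = exposure_value(16, 1 / 100)
wider_faster = exposure_value(8, 1 / 400)   # two stops wider, two stops faster

print(f"f/16 at 1/100 s: EV {sunny_16:.2f}")
print(f"f/8  at 1/400 s: EV {wider_faster:.2f}")
```

The f/16, 1/100 s setting works out to roughly EV 14.6, which rounds to the EV 15 quoted above. The formula has no term for subject distance, focal length, or sensor size.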

(snipped sections for brevity)

Actually the aperture did get physically smaller. Aperture is a function of lens opening and length. You can't maintain the same maximum aperture if you alter the length, which you have by adding the teleconverter, without also enlarging the lens opening, which you can't.

The aperture is a "ratio". An aperture of "f/4" can also be written as 1:4. I can write 1:4 as 2:8... or 25:100. They all express the same ratio. We reduce fractions and ratios to their simplified values.

So... if I have a 25:100 lens (that's a 100mm lens with a 25mm opening ... which works out to an f/4 focal ratio) and I put a 1.4x teleconverter on it, I get a 25:140 lens. Notice how only the denominator changes but the numerator stays the same. And yet... if you divide 25 into 140, you'll notice it goes in 5.6 times. (140 ÷ 25 = 5.6). Or... f/4 x 1.4 = f/5.6

So the physical aperture opening when measured in millimeters does not change. But the focal length does change. But this causes the "ratio" to change... and in this case, it's by exactly one full stop.
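The same arithmetic as a short sketch. The function names are made up for the example, and the teleconverter is modeled as a pure focal-length multiplier, ignoring any transmission loss in its glass.

```python
import math

def f_number(focal_length_mm: float, aperture_diameter_mm: float) -> float:
    """Focal ratio: focal length divided by the aperture opening."""
    return focal_length_mm / aperture_diameter_mm

def with_teleconverter(focal_length_mm: float, aperture_diameter_mm: float,
                       magnification: float) -> tuple[float, float]:
    """New focal length and focal ratio; the opening itself is unchanged."""
    new_focal = focal_length_mm * magnification
    return new_focal, f_number(new_focal, aperture_diameter_mm)

def stops_lost(magnification: float) -> float:
    """Light scales with 1 / N^2, so the loss is 2 * log2(magnification) stops."""
    return 2 * math.log2(magnification)

bare = f_number(100, 25)
focal_14, ratio_14 = with_teleconverter(100, 25, 1.4)

print(f"Bare lens: 100mm f/{bare:.1f}")
print(f"With 1.4x: {focal_14:.0f}mm f/{ratio_14:.1f}, about {stops_lost(1.4):.2f} stops lost")
print(f"With 2x:   {stops_lost(2.0):.0f} stops lost")
```

The 1.4x figure comes out just under one stop because 1.4 is a rounded √2.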

The ratio of dividing the same diameter opening into a longer focal length means that the ratio was altered... by exactly one stop.

Tim, if I might make a comment. You appear to give more weight to the aperture having a fixed value when manufactured. But the value is not the same thing as ability to capture light. Its meaning as a ratio is tied to the "35mm" image circle it projects. You can't capture as much light, at maximum aperture, with a smaller sensor.

You'll want to use equal "unit areas" when making the evaluation. Ignore the size of the sensor... just take the same "unit area" of sensor (such as the square centimeter.)

If you calculate the "entire area" of both sensors, decide that the smaller sensor receives less total light (by a factor of about 2.6, the square of the 1.6x crop factor), and then compensate for that by increasing the exposure... you'll notice that you now have a fairly over-exposed image and will likely not be satisfied with that result.

Here's a test. Hold two measuring cups* of the same size together and stand out under a sprinkler for a few minutes. Now measure how much water you captured. Repeat the same with a single measuring cup, same size as before. Measure how much you captured after the same amount of time. I guarantee that you will capture more water with two cups than you would with one.

Let's continue with this analogy and apply some abstract thinking by extending your metaphor. Suppose we have a plant which is extremely sensitive to the amount of water it receives. If the plant receives too little water, it will die of thirst. If it receives too much water, it will drown and die. It will live and thrive if it receives the correct amount of water. Suppose that "correct" amount of water happens to be 2 ounces.

If we have one plant living in each cup and we take away one of the planted cups, our remaining plant will receive the same required amount of water. The "cup" only collects the water falling within the rim of that cup. That's our "unit area". The cup and plant do not know if there are other potted plants living in cups nearby.

If, on the other hand, we realize that we were watering the cups until they received 2 ounces of water... but since we've removed one cup, we decide to give the remaining cup 4 ounces of water (to compensate for the cup we took away), we'll end up over-watering our plant.

Maximum aperture is nothing more than a measure of how much light you can capture for a given focal length. You will never be able to capture the same amount using fewer cups.

* Measuring cups are used to represent the area of the sensor, i.e. a two-cup sensor has more area than a one-cup sensor. This has nothing to do with the number of "cups" that any given sensor may contain because it is widely known that sensor cell-size can differ.

This is why you want to use a "unit area". Yes.. the total sensor size of the APS-C sensor is smaller and that means that the "total" amount of light may be less... but the light "per unit area" is identical. You do not want to change the exposure unless you want to over-expose the image.
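The distinction between total light and light per unit area can be sketched numerically. The sensor dimensions are nominal assumptions (36 x 24 mm for full frame, 22.5 x 15 mm for Canon APS-C), and the illuminance figure is an arbitrary placeholder.

```python
import math

# Nominal sensor dimensions in mm (assumed values for illustration).
FULL_FRAME_MM = (36.0, 24.0)
APS_C_MM = (22.5, 15.0)

def area_mm2(dims: tuple[float, float]) -> float:
    width, height = dims
    return width * height

def total_light(light_per_mm2: float, dims: tuple[float, float]) -> float:
    """Total light collected: per-unit-area light times sensor area."""
    return light_per_mm2 * area_mm2(dims)

LIGHT_PER_MM2 = 1.0  # arbitrary units; identical for both sensors behind the same lens

ratio = total_light(LIGHT_PER_MM2, FULL_FRAME_MM) / total_light(LIGHT_PER_MM2, APS_C_MM)

print(f"Light per mm^2: {LIGHT_PER_MM2} on both sensors")
print(f"Total light ratio (full frame / APS-C): {ratio:.2f}")
print(f"That is about {math.log2(ratio):.2f} stops of total light")
```

The exposure setting depends on the per-unit-area figure, which is the same for both sensors. The total differs only because one sensor covers more of the image circle.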

I hope this was able to resolve the confusion (because it was a lot of typing if it didn't help).

The change between "full frame" and "crop frame" isn't really all that mystical.

When you switch a lens between a crop-frame and a full-frame sensor camera, the only thing you change is the "angle of view" -- the physics of the light passing through the lens are still the same.

Since the angle of view has changed, the photographer may choose to use a different focal length or may choose to stand at a different camera-to-subject distance... but these are changes in the photographer's behavior... not changes in the physics of the light passing through the lens brought on by the use of a different sensor size.

5D III, 5D IV, 60Da

07-29-2014 09:23 PM - edited 07-29-2014 09:25 PM

Tim, I know about the cropping but where do you get the enlarging? The output size is not the same because there simply is no information from which to make it the same size. This is a function of the sensor size, not the lens. That's why I use the words "... performs as..."

If you want an output image of a given size (e.g. a 4 x 6" photo for example), then you'll need to enlarge the image from the crop-sensor camera more than the image from the full-frame camera in order to achieve that size.

The image on the crop sensor, all other things being equal, will occupy the exact same area as it does on the full frame sensor. The main difference is that the full frame photo also adds image to the surrounding areas. Using B&W printing as an analogy, you could put both images in a negative carrier, print the 35mm negative to a full 8x10, then print the smaller negative (that of the APS-C image) to the same 8x10 paper without altering the distance from the carrier to the paper holder, and you would get the exact same size image. It would look smaller but only because it is missing all the information on the outside.

At maximum aperture, which is what we're interested in, the Full Frame sensor will be filled by the image projected by an EF lens. When mounted to a crop sensor body, this same lens is simply incapable of delivering as much light to the sensor as before.

To avoid confusion... the amount of light delivered into the camera body is identical. The amount of light which lands on the sensor when using equal unit-areas of center (e.g. pick one square centimeter for example) would be identical.

Yeah, like I said..."(the) lens is incapable of delivering as much light to the sensor as before." The words "as before" referring to the time before you moved the lens to the crop sensor body.

Each individual photo-site (or "pixel" if you want to think of it as a debayered image) does not know how many neighboring photosites it has and whether they are receiving light or not. It only knows how much light it is receiving.

This is a moot point, Tim. I don't care if you're photographing a white sheet and all the photosites are maxed out; the fact of the matter remains that you can't receive as much light because it is a physically smaller sensor. It doesn't matter how many photosites you put on the array, if the light is not projected onto the sensor it isn't recorded. (Did you look at the composite image I posted, where the extra light the full frame camera receives is vividly illustrated? I'll post it again.)

In the composite image below the cat on the left was shot using a Full Frame Camera with a 50mm lens at f/2.8 1/1000 sec 100 ISO. The cat on the right was shot using a Crop Frame Camera with a 50mm lens at f/2.8 1/1000 sec 100 ISO. The distance to the subject remained fixed by way of a tripod. Note that the image on the left contains all the information (light) as the image on the right AND it includes additional information on all sides.

Even though the lenses were not identical the loss of control is evident because the Crop Frame image (right) is maxed out in terms of its ability to gather light. But I could move further away from the subject with the Crop Frame camera to achieve the same information as is captured by the Full Frame camera. Unfortunately doing so would cause a loss of control.

I'm not sure what you mean by "loss of control". If the photographer changes their subject distance to compensate for the different angle of view, they are simply recomposing the shot. I'm not sure that's a "loss" ... it's just "different".

Control can be the ability to choose just the right mix of photons to include in an image. Remember the test where your friend threw rice for you to catch in your cup? You could only get the more directly vectored rice when standing 15 feet back but you could catch the same and more when you stood closer. The whole point of that "test" is to illustrate what happens when you move away from your subject. Moving away means that you will receive more of the organized photons (a nearly parallel stream) than of the scattered photons, which can be captured as blur, for lack of a better word.

This is not a small matter. In portraiture, I might want to isolate my subject by using a very narrow DOF. I will not be able to achieve the same narrow DOF when I reduce the sensor size because I will have to move back to get the same shot. But I need the scattered photons which are closer to my subject and produce the beautiful diffused background that isolates the subject in the image.

Tim, this is also why medium-format may take off as a camera choice for near-pros and the very well-heeled. As the sensor size continues to grow, the lens collects even more of the scattered photons and delivers even more control over DOF. Medium-format has been the mainstay of fashion photography because the top fashion photographers understand light and they choose tools that allow them to control the light best.

07-29-2014 09:23 PM - edited 07-29-2014 11:22 PM

The image below was shot with the Crop Frame camera positioned further away from the subject to allow me to include all the information that was in the original Full Frame image. Even though all the lens settings, with the exception of focus, were unchanged, you can recognize that the new Crop image has a different "feel". Much of the original background blur is gone. As the camera is physically moved away from the subject, the light reaching the sensor is more organized and less diffuse in those areas outside the DOF. This is a real loss of control and is a direct result of sensor size.

If you change your subject distance, but use the same focal length and the same focal ratio (f-stop) then you will change the depth of field. That's part of the physics of the optics.

If I don't change anything but distance my DOF will move with me.

In your original post, I noted that you had translated the f-stop range on the lens.

In the case of the 300mm f/4 lens, you translated the f/4 to f/6.4 (multiplying by the 1.6x crop-factor). The focal ratio of the lens will not change.

Here's where your confusion is rooted. We are agreed that the lens doesn't change but the amount of light captured by a full frame camera is significantly more than that captured by a crop sensor camera. You can reframe the image by moving away from the subject but doing so reduces your ability to capture the light that is close to the image. (You moved away from the spot where the full frame photographer is positioned and "out" of the really good light.) You want the light that is close to the image because it gives you control to isolate your subject (assuming that's your interest) and it means the lens is effectively slower on the crop format.

The notion that the focal ratio would change because the size of the sensor changes is somewhat like believing the full-frame camera has one focal ratio in the center of the image, and a different focal ratio near the edges of the image. Or another way to think about it would be to use a movie projector to shine an image onto a screen of a given size, but then substitute that screen for a slightly smaller screen.... but placed at the identical distance from the projector.

If you do this (substitute the large screen for a smaller one), the image quality will not change nor will the brightness of it. But you will see less of the image because the parts that no longer fit on the small screen will spill off the sides. The part of the image that lands on the screen and does not spill off the sides will not change.

Yeah, we covered this. In agreement. But can the lens capture the light you need using that smaller sensor?

Here's a test. Stand 10 feet from a friend. Ask the friend to throw a handful of rice at you while you hold a cup out to catch the rice. Count the rice grains or weigh them. Now do the same thing when standing 15 feet from your friend. This time your cup will collect less rice. The reason is that only the rice that is more or less coming straight at the cup will be caught at 15 feet. At 10 feet you catch some of the rice that is scattering every which way as it reaches the cup in addition to the rice that is coming straight on.

I think you are trying to describe the inverse-square law using rice as an example. As light travels farther away from a source, it is spreading out. The amount of light landing in some fixed "unit area" of space (say... 1 square foot) will decrease based on its distance from that light source. It will exactly "halve" each time the distance from the light source increases by a factor equal to the square root of 2 (√2... approximately 1.4). In other words if we use the light landing on a subject from a light source positioned 5 feet away as our baseline, but now move the light so that it is 7 feet away, the amount of light landing on that subject will be reduced to just half (5 x 1.4 = 7). If we increase the distance to 10 feet, it will be halved again... and again at 14 feet... and again at 20 feet... and so on.

Didn't know what I was describing, so that's neat to know. Doesn't this suggest to you that the "quality" or "organization" of the light is different depending on how close you are to the subject? Why recomposing isn't the solution for tough photography assignments?

This isn't really the same example, however. If I hold a hand-held incident light meter in front of my subject and the light meter registers Ev 15 (a typical outdoor "sunny 16" type exposure), then I can use the EV 15 exposure (e.g. ISO 100, f/16, 1/100th sec shutter is an Ev 15 exposure) to photograph that subject regardless of my subject distance or lens focal length or camera sensor size.

Your light meter might offer a good gauge of intensity but won't tell you much about the quality of the light. Light flows, reflects, is scattered and can be intensely direct. What was the point of buying the 50mm L if I could get the same effect with the 50mm f1.8? I wanted the fast lens that allows me to capture the photons in their biggest "flood" into my camera. They don't flood-in as well with the f1.8.

(snipped sections for brevity)

Actually the aperture did get physically smaller. Aperture is a function of lens opening and length. You can't maintain the same maximum aperture if you alter the length, which you have by adding the teleconverter, without also enlarging the lens opening, which you can't.

The aperture is a "ratio". An aperture of "f/4" can also be written as 1:4. I can write 1:4 as 2:8... or 25:100. They all express the same ratio. We reduce fractions and ratios to their simplified values.

No disagreement. I confirmed this earlier and, for my purposes at least, no need to repeat yourself.

So... if I have a 25:100 lens (that's a 100mm lens with a 25mm opening ... which works out to an f/4 focal ratio) and I put a 1.4x teleconverter on it, I get a 25:140 lens. Notice how only the denominator changes but the numerator stays the same. And yet... if you divide 25 into 140, you'll notice it goes in 5.6 times. (140 ÷ 25 = 5.6). Or... f/4 x 1.4 = f/5.6

So the physical aperture opening when measured in millimeters does not change. But the focal length does change. But this causes the "ratio" to change... and in this case, it's by exactly one full stop.

You forgot to mention that the lens and aperture you describe projects a 35mm-size image. That's great if there's a 35mm-size sensor to receive it. If the sensor is a crop sensor you will not capture as much light and will have to move away from the subject to reframe the image. This effectively changes the photons you are able to capture and diminishes control.

The ratio of dividing the same diameter opening into a longer focal length means that the ratio was altered... by exactly one stop.

Tim, if I might make a comment. You appear to give more weight to the aperture having a fixed value when manufactured. But the value is not the same thing as ability to capture light. Its meaning as a ratio is tied to the "35mm" image circle it projects. You can't capture as much light, at maximum aperture, with a smaller sensor.

You'll want to use equal "unit areas" when making the evaluation. Ignore the size of the sensor... just take the same "unit area" of sensor (such as the square centimeter.) Okay.

If you calculate the "entire area" of both sensors and decide that the smaller sensor receives less light (by a factor of about 2.6, the square of the 1.6 crop factor) ... and so decide to compensate for that by increasing the exposure... you'll notice that you now have a fairly over-exposed image and will likely not be satisfied with that result.

Here is a point of confusion. I don't disagree with you regarding the intensity of the light that is reaching the sensor, just the quality. I won't step back to reframe my shot because I'll lose my good light.

Here's a test. Hold two measuring cups* of the same size together and stand out under a sprinkler for a few minutes. Now measure how much water you captured. Repeat the same with a single measuring cup, same size as before. Measure how much you captured after the same amount of time. I guarantee that you will capture more water with two cups than you would with one.

Let's continue with this analogy and apply some abstract thinking by extending your metaphor. Suppose we have a plant which is extremely sensitive to the amount of water it receives. If the plant receives too little water, it will die of thirst. If it receives too much water, it will drown and die. It will live and thrive if it receives the correct amount of water. Suppose that "correct" amount of water happens to be 2 ounces.

If we have one plant living in each cup and we take away one of the planted cups, our remaining plant will receive the same required amount of water. The "cup" only collects the water falling within the rim of that cup. That's our "unit area". The cup and plant do not know if there are other potted plants living in cups nearby.

This affirms the truth that the intensity of the light is the same, but ignores the fact that you only got one plant, not two. You would have gotten two plants had you used "full-frame". 🙂

If, on the other hand, we realize that we were watering the cups until they received 2 ounces of water... but since we've removed one cup, we decide to give the remaining cup 4 ounces of water (to compensate for the cup we took away), we'll end up over-watering our plant. Lost me.

Maximum aperture is nothing more than a measure of how much light you can capture for a given focal length. You will never be able to capture the same amount using fewer cups.

* Measuring cups are used to represent the area of the sensor, i.e. a two-cup sensor has more area than a one-cup sensor. This has nothing to do with the number of "cups" that any given sensor may contain, because it is widely known that sensor cell size can differ.

This is why you want to use a "unit area". Yes.. the total sensor size of the APS-C sensor is smaller and that means that the "total" amount of light may be less... but the light "per unit area" is identical. You do not want to change the exposure unless you want to over-expose the image.
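To put rough numbers on "unit area" versus "total", here is a small Python sketch. The sensor dimensions are nominal values I am assuming (36 x 24mm for full frame, about 22.5 x 15mm for a 1.6x APS-C body), and the light level is in arbitrary units.

```python
# Approximate sensor dimensions in mm (assumed nominal values).
FULL_FRAME = (36.0, 24.0)
APS_C = (22.5, 15.0)  # roughly a Canon 1.6x crop sensor


def area_mm2(sensor):
    """Sensor area in square millimeters."""
    width, height = sensor
    return width * height


def total_light(light_per_mm2, sensor):
    """Total light collected = light per unit area times sensor area."""
    return light_per_mm2 * area_mm2(sensor)


# Same lens, same f-stop, same shutter speed: the light per unit area
# (arbitrary units) is identical on both sensors.
light_per_mm2 = 1.0

ff_total = total_light(light_per_mm2, FULL_FRAME)
crop_total = total_light(light_per_mm2, APS_C)
area_ratio = ff_total / crop_total

print(f"light per unit area: {light_per_mm2} on both sensors")
print(f"total light, full frame vs crop: {area_ratio:.2f}x")
```

The per-unit-area figure is what the exposure is based on, and it never enters the sensor-size comparison. Only the total changes, by roughly the square of the crop factor.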

I hope this was able to resolve the confusion (because it was a lot of typing if it didn't help).

I hope your confusion is now cleared up regarding why sensor size matters when you want to control the DOF.

The change between "full frame" and "crop frame" isn't really all that mystical.

And yet you completely missed the important point of what the different size sensors allow you to do.

When you switch a lens between a crop-frame and a full-frame sensor camera, the only thing you change is the "angle of view" -- the physics of the light passing through the lens are still the same.

Not. You can't get the same DOF with a crop sensor because you are constantly moving back from the image to reframe and consequently moving further from the "good" light.

Since the angle of view has changed, the photographer may choose to use a different focal length or may choose to stand at a different camera-to-subject distance... but these are changes in the photographer's behavior... not changes in the physics of the light passing through the lens brought on by the use of a different sensor size.

The light doesn't change, just the ability to get the right or good light.

This evening, light provided the perfect DOF opportunity and it flooded-in.

07-29-2014 11:20 PM

Hi Cale,

This has gotten much too long and I suspect most people won't bother to read it.

I noticed we are, in principle, agreeing... but you're using a different vocabulary.

I did mention (both in this thread and in others) that while the physics of the lens don't change when you change sensor sizes, the photographer's behavior will likely change (but that's a choice being made... not a law of physics). That behavioral difference (e.g. when using a full-frame camera you can either use a longer focal length OR you can choose to stand closer to your subject) may reduce the depth of field and increase the intensity of background blur. It is important to emphasize that this is the result of a decision made by the photographer to change the way they chose to capture the shot -- and not a fundamental change in the physics of the camera lens due to swapping the camera body.

The amount of light received by the camera does not change. The meter reading will not change. The size of the image circle being projected into the camera body will also not change. The only thing that changes is that the resulting image will have a narrower angle of view on the crop-sensor body.

The post that motivated my initial response was the post in which you translated the f-stop by the crop factor. This was a point that would cause confusion (and is factually incorrect -- no matter how you look at it.) I do hope we now agree on that point.

5D III, 5D IV, 60Da

07-29-2014 11:50 PM

Tim, IMHO, you are being preposterous because you give far too little regard to the basic fact that grabbing more light matters and the ability to control the light you capture matters a great deal. Where would L lens sales be if it wasn't for fast glass?

Putting an f/1.2 on a 7D is a waste, IMHO, because moving back is not a solution. It is a compromise, and a compromise is something camera owners should be aware of when considering the labeling conventions adhered to by the camera manufacturers.

Don't bother complaining that it's been too wordy. You apparently require more words than most.

07-30-2014 12:15 AM

Cale,

We can't have a discussion on this if you keep changing the subject.

You mentioned earlier that changing the camera body changes the focal ratio (it does not).

Then that morphed into the notion that changing the sensor size changes the amount of light collected by the camera (only if you attempt to think of it as "total area" and not "unit area"... but exposure values -- which is what we really care about in photography -- are based on "unit area" and not "total area"). You would not take a shot thinking... I'll crop that in later on my computer so I better over-expose the shot to compensate for the fact that the "cropped" area will have less total light. In such a situation you would, of course, not change the exposure... because there is no difference in light. Crop factor does not matter when it comes to focal ratios and exposure values.

But now you're talking about using completely different lenses??? What does using an f/1.2 lens have to do with a discussion around the 100-400mm f/4.5-5.6L???

At first I thought you were confused on the topic and would welcome the clarification... but as the thread grows it looks like you are mostly just interested in having a debate.

This isn't really a subject of "debate". A "debate" would be appropriate if we were discussing a controversial topic and the facts behind it were not necessarily well-understood and we needed to explore the aspects of the argument. This is an area of physics which is extremely well understood.

@cale_kat wrote:

Don't bother complaining that it's been too wordy. You apparently require more words than most.

The physics that control the behavior of light through the lens and the exposure values... these aren't my ideas. I didn't invent this. Please don't debate this with me. You don't need to read how it works from me... you can do the research and learn this elsewhere.

That is to say... I don't require any words. You needn't try to explain on my account.

5D III, 5D IV, 60Da

07-30-2014 12:32 AM

OMG Tim, I said the effective f stop. Quit blaming me for your misunderstanding.

07-30-2014 12:59 AM - edited 07-30-2014 01:27 AM

@cale_kat wrote:OMG Tim, I said the effective f stop. Quit blaming me for your misunderstanding.

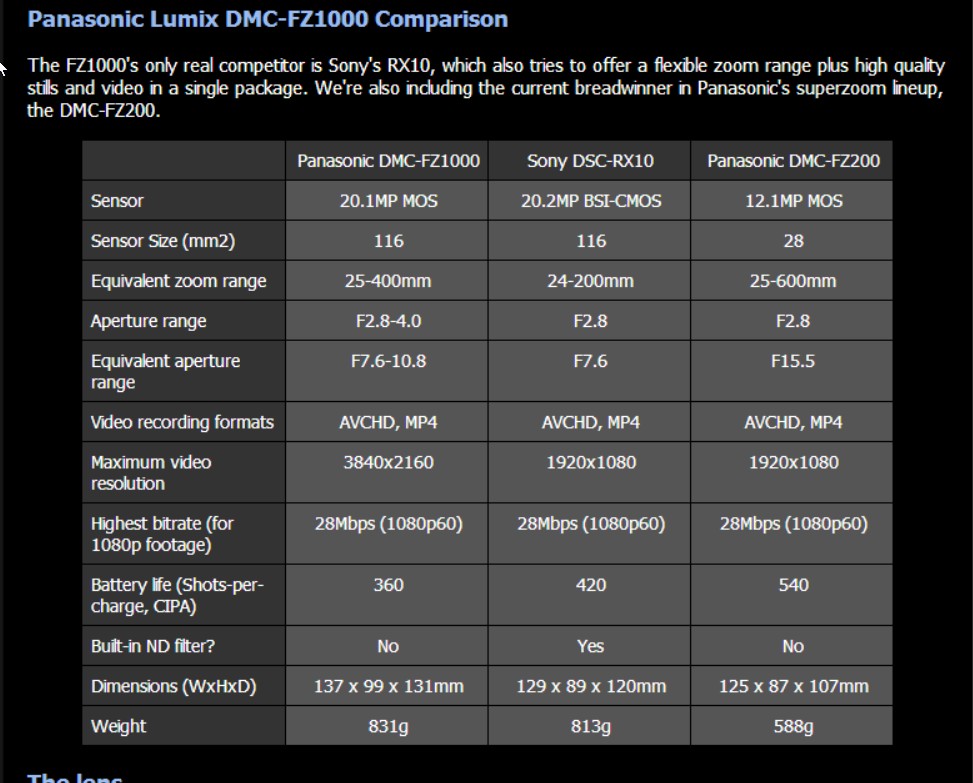

Is it you who could use some brushing up? Note that the review attached for the Panasonic DMC-FZ1000 features a camera with an advertised lens capable of 9.1-146mm f/2.8-4.0. That sounds really good! Imagine the beautiful bokeh you'll be able to get at 9mm with an f/2.8 aperture. All this for less than $1,000. Tim, are you one of those people that believe everything you read? (Try buying a Canon lens that fast, let alone one that will only give up one f-stop over the course of a 16X zoom. Sigma sells a nice 15mm f/2.8 for a bit over $600 street. Seems a bit high considering that for not that much more, the Panasonic includes a camera body and a 16X zoom.)

The review at DPReview notes something important that I don't want you to miss. The aperture range is converted from a factory-certified f/2.8-4 to an equivalent f/7.6-10.8. Whoa! That's a big difference. I mean the f/2.8-4.0 is printed right on the lens. How could this change to f/7.6-10.8 come about? I think it has something to do with the size of the sensor.

Linky: http://www.dpreview.com/reviews/panasonic-lumix-dmc-fz1000

07-30-2014 01:35 AM

@cale_kat wrote:OMG Tim, I said the effective f stop. Quit blaming me for your misunderstanding.

Cale, there is a concept called "effective focal length" (and you have to be careful when you use it -- it is frequently misunderstood).

There is no such thing as an "effective f-stop".

An f-stop (aperture value) is part of the exposure value. If you change it, you change the exposure and would have to compensate by adjusting something else (shutter speed or ISO).
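A rough Python sketch of what that compensation looks like, using the usual rule that light per unit area scales with 1/N² for an f-number N. The function names and the 1/500 s starting shutter speed are just illustrative choices of mine.

```python
import math


def stops_between(n_from, n_to):
    """Exposure change in stops when going from f/n_from to f/n_to.

    Light per unit area scales with 1 / N**2, so the change is
    2 * log2(n_to / n_from). A positive result means less light.
    """
    return 2.0 * math.log2(n_to / n_from)


def compensated_shutter(shutter_s, n_from, n_to):
    """Shutter time (seconds) that keeps the exposure the same."""
    return shutter_s * (n_to / n_from) ** 2


stops = stops_between(4.0, 5.6)
new_shutter = compensated_shutter(1 / 500, 4.0, 5.6)

print(f"f/4 -> f/5.6 costs {stops:.2f} stops")
print(f"1/500 s becomes about 1/{1 / new_shutter:.0f} s")
```

Going from f/4 to f/5.6 comes out at very nearly one stop, so the shutter time roughly doubles (or the ISO does) to hold the same exposure.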

Using terminology such as "effective focal length" is valid (even though inaccurate) because what the photographer REALLY wants is an "angle of view" (experienced photographers tend to think more in terms of angle of view when they change the lens and not because they're trying to use the zoom as a way of avoiding having to walk closer or farther from a subject.) When one says that a lens has an "effective focal length" of ... say 50mm, then what they really mean is that it provides an angle of view comparable to what a 50mm lens would have provided on a full-frame camera.
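Here is a short Python sketch of that angle-of-view comparison, using the standard formula 2·atan(sensor width / (2 × focal length)) for a lens focused at infinity. The sensor widths are nominal values I am assuming (36mm full frame, 22.5mm for a 1.6x crop body), and the function name is made up.

```python
import math

FULL_FRAME_WIDTH_MM = 36.0
APS_C_WIDTH_MM = 22.5  # assumed nominal width for a 1.6x crop sensor


def horizontal_angle_of_view(focal_length_mm, sensor_width_mm):
    """Horizontal angle of view in degrees, lens focused at infinity."""
    half_angle = math.atan(sensor_width_mm / (2.0 * focal_length_mm))
    return math.degrees(2.0 * half_angle)


crop_factor = FULL_FRAME_WIDTH_MM / APS_C_WIDTH_MM

# A 300mm lens on the crop body...
aov_crop = horizontal_angle_of_view(300.0, APS_C_WIDTH_MM)
# ...versus a lens of the "effective" focal length on full frame.
aov_ff_equiv = horizontal_angle_of_view(300.0 * crop_factor, FULL_FRAME_WIDTH_MM)

print(f"crop factor: {crop_factor:.1f}")
print(f"300mm on crop body: {aov_crop:.2f} degrees")
print(f"{300.0 * crop_factor:.0f}mm on full frame: {aov_ff_equiv:.2f} degrees")
```

The two angles match, which is all "effective focal length" is meant to say. Nothing in the calculation touches the f-stop.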

But use of a term such as "effective f-stop" is simply wrong. There is no such thing. Attempts to use such terminology are mis-informed, confused, and misleading (at best).

I've noticed you trying to use it as a way of saying that it represents a change in a depth of field... but that's a terrible description because f-stop alone does not describe depth of field. To define a depth of field you must have three elements... (1) focal length, (2) focal ratio, and (3) focused subject distance. There is simply no such thing as an "f/2.8 depth of field" for example.

If we pick on the Canon EF 14mm f/2.8L ...and using f/2.8 we focus the lens to merely 10 feet... we get a 54 foot depth of field. The hyper-focal distance on that lens is 12 feet -- at which point everything from 6' to infinity is in focus.

In contrast... if we look at an EF 300mm f/2.8L IS... and still using f/2.8 and a focus distance of 10'... the depth of field is merely 0.03'. That lens has a hyper-focal distance of more than 1 mile (5495 feet) at which point everything from about half a mile to infinity is in focus.

This is because focal ratios alone are not a description of depth of field without also including a focal length and focused subject distance. A focal ratio is properly (and consistently) a measure of light-gathering capability. That will remain the same regardless of the focal length of the lens and focused distance (there are technically some exceptions to this... but few.)
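For anyone who wants to play with the numbers, here is a Python sketch using the common thin-lens depth of field formulas. The circle of confusion of 0.019mm is my assumption (a value often used for Canon APS-C bodies); the results are sensitive to it, so they land close to the figures quoted above but not exactly on them.

```python
MM_PER_FOOT = 304.8
COC_MM = 0.019  # assumed circle of confusion, typical for Canon APS-C


def hyperfocal_mm(focal_mm, f_number, coc_mm=COC_MM):
    """Hyperfocal distance in mm."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def dof_limits_mm(focal_mm, f_number, subject_mm, coc_mm=COC_MM):
    """Return (near, far) limits of acceptable focus in mm.

    The far limit is infinity when focused at or beyond the hyperfocal
    distance.
    """
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    if subject_mm >= h:
        return near, float("inf")
    far = subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far


subject = 10 * MM_PER_FOOT  # focused at 10 feet, same f/2.8 for both lenses
for focal in (14.0, 300.0):
    near, far = dof_limits_mm(focal, 2.8, subject)
    h_ft = hyperfocal_mm(focal, 2.8) / MM_PER_FOOT
    dof_ft = (far - near) / MM_PER_FOOT
    print(f"{focal:.0f}mm f/2.8: DOF {dof_ft:.2f} ft, hyperfocal {h_ft:.0f} ft")
```

Same f/2.8, same 10 foot focus distance, and the depth of field differs by a factor of more than a thousand between the two focal lengths.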

If you're going to use abstract comparisons and analogies... it's important that the analogies be accurate. Otherwise you end up creating confusion (and it seems as if you've managed to confuse yourself in the process.)

5D III, 5D IV, 60Da
